When I connected my business bank account to Quickbooks, I quickly realized that the revenue numbers were wrong. Stripe takes a bite out of the transactions before sending it to my bank, so if I invoiced someone for $500, Stipe would charge a $15 fee and then my bank would see $485 as the revenue. This would be copied to Quickbooks, so my sales would look like $485, not $500.

However, there’s no native Stripe->Quickbooks connector. You can either install a third party extension (ಠ_ಠ) or download a CSV of transactions from Stripe and upload a CSV of transactions into Quickbooks.

I decided to try going the CSV route. Goals:

- Record the absolute sales amount, before any fees. E.g., $500

- Record the fee for the transaction. E.g., $500 * 2.9% -> -$14.50

- Record the refunds for that fees (since we have some fee-free processing for the first $N of purchases). E.g., +$14.50

- Record the fee for using the pleasure of Stripe billing E.g., $500 * .7% -> $-3.50

- Record the taxes on the fees Stripe charges (thanks Stripe). E.g., $3.50 * 9% = -$.32

Which nets out to $500 – $14.50 + $14.50 – $3.50 – $.32 = $496.18. And, of course, this is a single payment! Customers obviously aren’t perfectly synced on billing payouts, so Stripe extrudes money into our business bank account like a poorly-filled sausage of payment blops of weird amounts. This gets very complicated and annoying to untangle on the bank account side, so I’d like it to be really clearly laid out as Stripe transactions.

The Stripe Side

We want to get all of the Stripe transactions in some sensible, readable form. To do so, go to Stripe’s Balance summary page and set the time frame for what you want (e.g., last month, last year, etc.). Then scroll down to “Balance change from activity” and hit “Download.” Do the same for “Payouts”. Keep the defaults. You should end up with two CSVs downloaded. (If you like this system, you can subscribe at the top of the page to have Stripe email you the reports each month.)

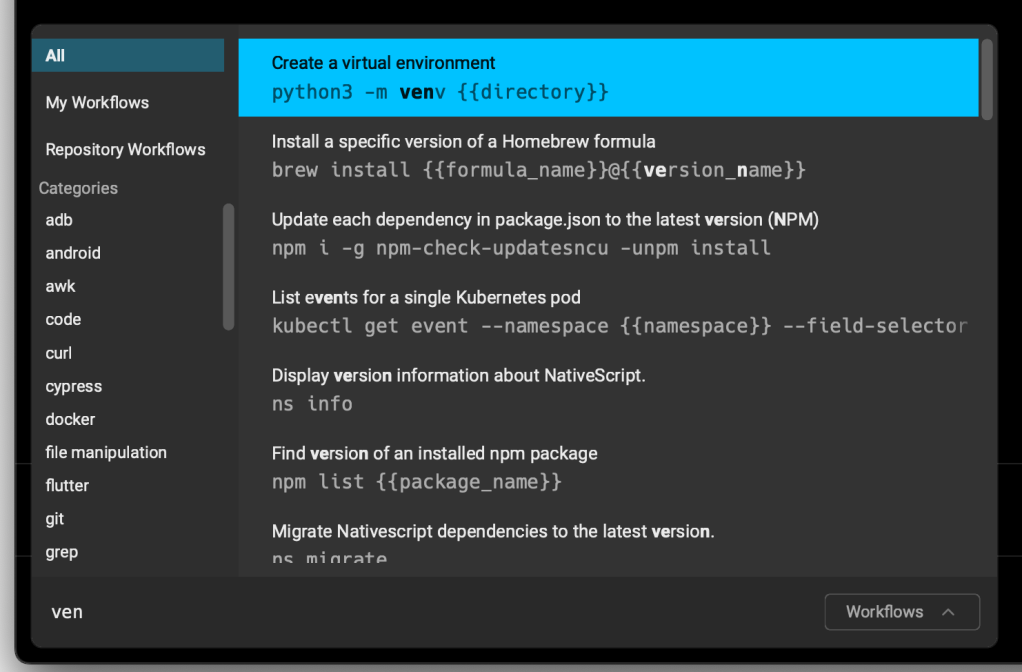

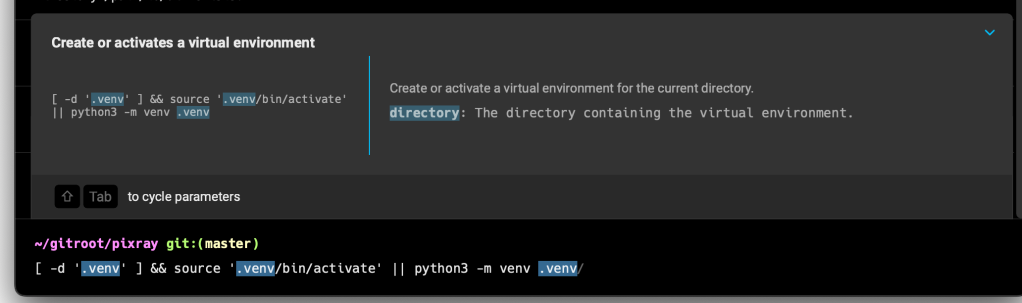

However, Quickbooks does not like the format Stripe presents these amounts in, so I put together a script to munge the CSV into a QBO-friendly form, which you can see/copy/run on Colab. If you don’t really care about the mechanics you can run the whole colab, download the output (stripe_2024-12.csv) and skip down to “The Quickbooks side” section of this post.

If you want to understand what’s going on: first let’s consider the balance change CSV (named something like Itemized_balance_change_from_activity_USD_daterange.csv). Stripe creates a table of the form (extra columns excluded for simplicity):

| Description | Created | Gross | Fee |

| Llama Brush | 2025-01-30 | $30 | $3 |

| Stripe Billing Usage Fee | 2025-01-29 | -$10 | $1 |

In QBO, we’d like to split gross and fee into two separate rows to look like:

| Description | Date | Amount |

| Llama Brush | 2025-01-30 | $30 |

| Llama Brush Fee | 2025-01-30 | -$3 |

| Stripe Billing Usage Fee | 2025-01-29 | -$10 |

| Stripe Billing Usage Fee Tax | 2025-01-29 | -$1 |

This takes a few steps: first, we want to make all of the fees negative. Then we want to split each row into two rows: one row for its actual amount and one row for its fees. Then we want everything to be named the way QBO expects (not necessary, but involves less clicks on import) and remove $0 rows.

Making the fees negative is easy, we just multiply that column by -1:

df = df.assign(fee=df.fee * -1)Splitting each row into two rows is a little more complex. We can use Pandas’s stack function, but it discards all of the other columns so we are going to “store” the other columns we want (created and description) in the index:

df = df.set_index(['created', 'description'])

# This makes the stacked index have the name "amount_type"

# (instead of "level_2").

df.columns.name = 'amount_type'

df = df[['gross', 'fee']].stack()

# This names the stacked column "amount" (instead of "0").

df.name = 'amount'Now we have a dataframe with three levels of index and one coumn.

Reset the index to get it back to “normal” data and one index.

df = df.reset_index()Then I’d like each “fee” description to be suffixed with “fee” and each tax description to be suffixed with tax:

# The usage fee fee is actually tax (I asked support because I

# was so annoyed).

tax_mask = (df.amount_type == 'fee') & df.description.str.contains('Usage Fee')

# Any fee that isn't a tax.

fee_mask = (df.amount_type == 'fee') & (~tax_mask)

df.loc[tax_mask, 'description'] = df['description'] + ' tax'

df.loc[fee_mask, 'description'] = df['description'] + ' fee'Finally, let’s do some housekeeping. Drop the “amount_type” column, rename “created” to “date”, and drop any rows that are $0 (Quickbooks doesn’t understand $0):

df = df.drop(columns=['amount_type']).rename(columns={'created': 'date'})

df = df[df.amount != 0]If we create a “Stripe” account in Quickbooks and upload this, it will have all of the income and fees we generated on Stripe. However, Quickbooks will entirely refuse to match this up with the Stripe payouts in our bank account, since the bank payouts are batched and won’t match up with any of the amounts in our current CSV. Thus, it’ll look like we earned this income twice! (Once in Stripe and once in our bank account, minus fees.) This is where our second “Payouts” CSV comes in.

Load it into a second dataframe. This one is much simpler, so we’ll just rename and pull out the columns we want, then concatenate it with our transactions dataframe.

payouts = pd.read_csv('...')

payouts = (

payouts

.rename(columns={'effective_at': 'date', 'gross': 'amount'})

.assign(

amount=lambda x: x.amount * -1,

description=lambda x: 'Payout ' + x.payout_id

)

[['date', 'amount', 'description']]

)

df = pd.concat([df, payouts]).sort_values('date')Last up, store it in a CSV:

df.to_csv('stripe_2024-12.csv', index=False, header=True)The Quickbooks Side

Now go to your Quickbooks account and create a Stripe “bank” account if one doesn’t exist already. Then under Transactions -> Bank Transactions select the arrow next to “Link Account” and select the “Upload File” option.

Under “Manually upload your transactions,” select the stripe_2024-12.csv (or whatever) file you created above and hit “Continue.” In “Which account are these transactions from?” select the “Stripe” account.

On the next page (“Let’s set up your file in QuickBooks”), most of the defaults are fine, but for “What’s the date format used in your file?” select the last option: yyyy-MM-dd. Hit “Continue.”

It’ll give you a preview of what it’s importing at that point. If it looks okay, hit the top checkbox to select them all and hit Continue. Then confirm yes, you really do want to import these.

Finally, you’ll get back to the transaction categorization screen! Quickbooks should, at this point, automatically match up your Stripe payouts to you bank account.

This gives me, finally, the view I want: actual top-line revenue, service fees, taxes paid, and payouts to the bank account.

Hopefully this will be helpful for other programmers dealing with Stripe + Quickbooks! However, I’m a newbie at Quickbooks, so let me know if you see any improvements I could make.